GCP Data Engineer Roles and Responsibilities

In this article, we will look into all the essential details about being a Google Cloud Platform (GCP) Data Engineer. We’ll cover everything from core responsibilities and necessary skills to the tools and best practices for managing data on GCP.

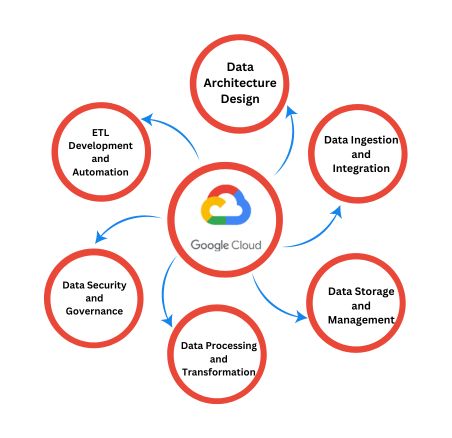

A GCP Data Engineer is responsible for designing, building, and maintaining data processing systems on Google Cloud Platform. They ensure that data is accessible, reliable, and efficiently managed to support analytics and business decision-making. Their role involves a broad range of tasks, from data integration to pipeline optimization, and they work closely with other teams to ensure the integrity and usability of data.

Data Integration

Data Engineers integrate various data sources into a unified data warehouse or data lake. They use tools like Cloud Dataflow, Cloud Dataproc, and Cloud Pub/Sub to streamline the ingestion, transformation, and storage of data.

Data Pipeline Development

Creating and managing data pipelines is a core responsibility. This involves designing workflows that automate the movement and transformation of data, ensuring they are efficient and scalable. Tools such as Apache Beam and Dataflow are often employed.
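As a rough illustration of what "automating the movement and transformation of data" can mean, the sketch below runs a small workflow in dependency order using only the Python standard library. It is not Apache Beam or Dataflow code; the task names (`ingest`, `clean`, `aggregate`, `publish`) and the `run_workflow` helper are hypothetical and exist only for this example.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "publish": {"aggregate"},
}


def run_workflow(dependencies, tasks):
    """Run each task once, after all of its dependencies have run."""
    executed = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Shared state stands in for data moving between stages.
state = {"rows": []}
TASKS = {
    "ingest": lambda: state.update(rows=[3, 1, None, 2]),
    "clean": lambda: state.update(rows=[r for r in state["rows"] if r is not None]),
    "aggregate": lambda: state.update(total=sum(state["rows"])),
    "publish": lambda: state.update(published=True),
}

order = run_workflow(DEPENDENCIES, TASKS)
```

Declaring dependencies separately from the task bodies is the same idea that managed orchestration tools build on: the scheduler, not the author, decides the execution order.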

Data Storage Management

Data Engineers must select and manage appropriate storage solutions, such as BigQuery, Cloud Storage, and Cloud SQL. They ensure that data is stored in a manner that balances performance, cost, and reliability.

Data Transformation

They transform raw data into a format that can be easily analyzed. This includes tasks like data cleansing, aggregation, and enrichment using tools like Dataflow and Dataprep.
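The three tasks named above (cleansing, enrichment, aggregation) can be sketched in plain Python. This is a minimal illustration, not Dataflow or Dataprep code; the record fields, the `REGION_BY_COUNTRY` lookup, and the function names are assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical raw records; field names are illustrative only.
RAW_ORDERS = [
    {"order_id": "1", "country": " us ", "amount": "19.99"},
    {"order_id": "2", "country": "DE", "amount": "5.00"},
    {"order_id": "3", "country": "us", "amount": "not-a-number"},  # invalid amount
    {"order_id": "4", "country": "", "amount": "7.50"},            # missing country
    {"order_id": "5", "country": "de", "amount": "10.00"},
]

# Hypothetical lookup table used for enrichment.
REGION_BY_COUNTRY = {"US": "Americas", "DE": "EMEA"}


def cleanse(records):
    """Normalize fields and drop rows that cannot be repaired."""
    for rec in records:
        country = rec["country"].strip().upper()
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # unparseable amount
        if not country:
            continue  # missing country
        yield {"order_id": rec["order_id"], "country": country, "amount": amount}


def enrich(records):
    """Attach a region to each record using the lookup table."""
    for rec in records:
        yield {**rec, "region": REGION_BY_COUNTRY.get(rec["country"], "Unknown")}


def aggregate(records):
    """Sum order amounts per region."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec["region"]] += rec["amount"]
    return {region: round(total, 2) for region, total in totals.items()}


def transform(records):
    return aggregate(enrich(cleanse(records)))
```

Calling `transform(RAW_ORDERS)` drops the two invalid rows and returns one total per region.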

Performance Optimization

Optimizing the performance of data processing systems is crucial. Data Engineers regularly monitor system performance, identify bottlenecks, and implement solutions to improve speed and efficiency.
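One simple way to identify a bottleneck is to time each pipeline stage and compare the results. The sketch below does this with the standard library; the `StageTimer` class and the stage names are hypothetical, and the `time.sleep` call merely stands in for an expensive step.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Collects wall-clock durations per named pipeline stage."""

    def __init__(self):
        self.durations = {}

    @contextmanager
    def measure(self, stage):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.durations[stage] = self.durations.get(stage, 0.0) + elapsed

    def bottleneck(self):
        """Return the stage with the largest accumulated duration."""
        return max(self.durations, key=self.durations.get)


timer = StageTimer()
with timer.measure("extract"):
    rows = list(range(1000))
with timer.measure("transform"):
    time.sleep(0.05)  # stand-in for an expensive step
    rows = [r * 2 for r in rows]
with timer.measure("load"):
    total = sum(rows)
```

Here `timer.bottleneck()` points at the `transform` stage, which is where optimization effort would go first. On GCP, comparable per-stage measurements would typically come from the platform's own monitoring rather than hand-written timers.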

Security and Compliance

Ensuring data security and compliance with regulations is a critical responsibility. Data Engineers implement best practices for data encryption, access control, and auditing to protect sensitive information.
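To make access control and auditing concrete, here is a minimal sketch of a role-based permission check that records every access attempt. It is not GCP IAM code: the roles, the `ROLE_PERMISSIONS` mapping, and the in-memory `audit_log` are hypothetical, and a real project would rely on the platform's access management and audit logging instead.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}

# In-memory audit trail; a real system would write to durable storage.
audit_log = []


def is_allowed(role, action):
    """Return True if the role grants the requested action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def access(user, role, action, resource):
    """Check permission and record the attempt, whether allowed or denied."""
    allowed = is_allowed(role, action)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as granted ones matters for auditing, since a run of denials is often the first sign of a misconfiguration or misuse.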

Collaboration and Communication

Effective communication with stakeholders, including data scientists, analysts, and business leaders, is essential. Data Engineers translate technical details into business insights, ensuring that data solutions align with organizational goals.

Monitoring and Troubleshooting

Data Engineers are responsible for setting up effective monitoring systems to track the health and performance of data pipelines and storage solutions. They use tools like Cloud Monitoring and Cloud Logging (formerly Stackdriver), enabling them to quickly identify and resolve issues to ensure data integrity and availability.

What is a GCP Data Engineer?

A Google Cloud Platform (GCP) Data Engineer specializes in building and managing data processing systems within the GCP ecosystem. They utilize a suite of cloud-based tools and services to design scalable, efficient, and secure data architectures. This role is important in transforming raw data into meaningful insights that drive strategic decision-making across organizations. GCP Data Engineers are skilled at handling complex data integration tasks, ensuring that data from various sources is seamlessly ingested, transformed, and stored for analysis.

In addition to technical expertise, GCP Data Engineers play a crucial role in ensuring data quality and reliability. They implement best practices for data governance, maintain data pipelines, and monitor system performance to prevent disruptions. Their work often involves collaborating with data scientists, analysts, and other stakeholders to align data solutions with business needs. By focusing on performance optimization, security, and compliance, GCP Data Engineers help organizations maximize the value of their data while maintaining a reliable data infrastructure.

What Does a Data Engineer Do?

- Data engineers establish the groundwork for a database and its architecture, evaluating diverse requirements and applying pertinent database techniques to craft a resilient structure.

- Subsequently, they initiate the implementation process, constructing the database from the ground up.

- At regular intervals, data engineers conduct testing to detect and address any bugs or performance issues.

- The ongoing responsibility involves maintaining the database, ensuring seamless operation, and preventing disruptions.

- A database malfunction can bring the associated IT infrastructure to a standstill, which highlights the indispensable role of a data engineer.

- Their expertise is particularly vital in managing large-scale processing systems, necessitating continuous attention to performance and scalability concerns.

- Moreover, data engineers contribute to the data science team by devising dataset procedures that facilitate data mining, modeling, and production. This active involvement significantly enhances the overall quality of the data.

What are the Skills Required to Become a GCP Data Engineer?

A Cloud Data Engineer requires a combination of technical and soft skills to design, implement, and manage data solutions in a cloud environment. Here are some key skills that a Cloud Data Engineer needs:

Technical Skills

- A solid command of scripting and programming languages, such as Python, Java, Scala, and C++.

- Proficiency in Cloud Platforms (AWS, Azure, GCP).

- Knowledge of Big Data Technologies (Hadoop, Spark).

- Experience with Data Warehousing (Redshift, Synapse, BigQuery).

- Competence in Database Management (SQL, NoSQL).

- Understanding of data warehousing and data modeling principles.

- Familiarity with UNIX and GNU/Linux systems.

- Ability to maintain ETL processes operating on various structured and unstructured sources.

- Familiarity with Kafka for handling real-time data feeds.

- Knowledge of using Kafka in conjunction with Hadoop.

- Understanding of data structures and algorithms.

- Troubleshooting and optimization skills.

Soft Skills

Some key soft skills include:

- Effective Communication.

- Comprehensive problem-solving abilities.

- Adaptability.

- Analytical skills.

- Critical thinking ability.

- Capacity to work both independently and as part of a team.

- Strong social skills.

- Innovation.

- Proactiveness.

- Decision-making skills.

- Observational skills.

Who can become a GCP Data Engineer?

Anyone with a background in data engineering, computer science, or a related field can become a Google Cloud Platform (GCP) Data Engineer. The typical path involves a combination of education, hands-on experience, and the development of specific technical skills.

- Educational Background: A bachelor’s degree in computer science, information technology, or a related field is usually required. Advanced degrees or certifications in data engineering or cloud computing can provide a competitive edge.

- Technical Skills: Proficiency in programming languages such as Python, Java, or SQL is essential. Familiarity with data processing frameworks like Apache Beam, Hadoop, or Spark, and experience with GCP tools such as BigQuery, Cloud Dataflow, and Cloud Storage are crucial.

- Understanding of Cloud Computing: In-depth knowledge of cloud computing principles and GCP services is necessary. This includes understanding how to deploy, manage, and optimize data solutions on GCP.

- Data Management Experience: Practical experience in data modeling, ETL (Extract, Transform, Load) processes, and database management is important. This includes working with relational and non-relational databases, as well as data warehousing solutions.

- Analytical Skills: Strong analytical and problem-solving skills are required to design efficient data processing systems and troubleshoot issues. Data Engineers must be able to analyze complex data sets and derive meaningful insights.

- Certification: Obtaining a GCP Data Engineer certification can validate your skills and knowledge, making you a more attractive candidate to potential employers. Certification programs typically cover data engineering best practices and GCP-specific tools.

- Experience with Data Security and Compliance: Understanding data security best practices and compliance requirements (such as GDPR or HIPAA) is essential. Data Engineers must ensure that data is protected and handled in accordance with legal standards.

- Soft Skills: Strong communication and teamwork skills are important for collaborating with other team members and stakeholders. The ability to explain technical concepts to non-technical audiences is also valuable.

GCP Data Engineer Job Description

The primary responsibility of a data engineer involves gathering, overseeing, and transforming raw data into interpretable information for data scientists and business analysts. Their ultimate objective is to ensure data accessibility, empowering organizations to leverage data for performance evaluation and optimization.

They work with various GCP tools like BigQuery, Cloud Dataflow, and Cloud Storage to store, process, and analyze data. Ensuring data quality and reliability is a key part of the job, as well as optimizing the performance of data systems to make sure they run smoothly and cost-effectively.

Additionally, a GCP Data Engineer collaborates closely with data scientists, analysts, and other stakeholders to understand data needs and deliver solutions that support business goals. They also focus on data security, implementing measures to protect sensitive information and ensure compliance with regulations.

Job Roles for GCP Data Engineers

Data Engineer

A Google Cloud Professional Data Engineer designs and constructs data processing systems. Collaboration with other data experts, including data scientists and analysts, is essential to identify requirements and implement data solutions.

Data Analyst

Google Cloud data engineers can also work as data analysts. This role includes creating dashboards, building data models, and conducting statistical analysis.

Big Data Analytics

In Big Data Analytics roles, GCP Data Engineers develop scalable data processing and analytics pipelines using technologies like BigQuery, Dataflow, and Pub/Sub, enabling organizations to derive valuable insights from massive datasets.

Data Solution Architecture

In Data Solution Architecture roles, GCP Data Engineers design end-to-end data solutions, analyzing business requirements and creating scalable architectures using GCP services.

Machine Learning Engineer

Google Cloud Professional Data Engineers can also work as machine learning engineers. They are responsible for planning and constructing models for machine learning on the Google Cloud Platform.

Cloud Architect

Qualified Google Cloud data engineers may serve as cloud architects, creating and implementing cloud-based programs for their organizations. In this role, they decide which Google Cloud Platform services best suit the organization's requirements and configure them accordingly.

DevOps Engineer

GCP Data Engineers with knowledge of DevOps practices can work as DevOps Engineers. Bridging the gap between development and operations, they ensure the smooth deployment, operation, and maintenance of data solutions. Collaborating with development teams, data engineers, and IT operations, they build robust and scalable data pipelines, implement continuous integration and deployment practices, and optimize system performance.

Conclusion

In today’s data-driven world, the demand for data engineers is substantial and is expected to grow further. Businesses increasingly rely on data for informed decision-making, creating a growing need for experts capable of developing and maintaining data processing systems.

To enhance career prospects and income potential, data engineers can pursue certifications like the Google Cloud Professional Data Engineer certification, offering a valuable edge in a competitive job market.

In conclusion, the outlook for data engineers is positive, with numerous opportunities for career advancement and competitive pay. Those in this dynamic field can improve their prospects by staying ahead of industry trends, obtaining the necessary training and credentials, and continuously advancing their expertise through professional development.

FAQs

GCP Data Engineer Roles and Responsibilities

- Design and deploy data pipelines by leveraging GCP services like Dataflow, Dataproc, and Pub/Sub.

- Develop and sustain data ingestion and transformation processes utilizing tools such as Apache Beam and Apache Spark.

- Establish and manage data storage solutions using GCP services, including BigQuery, Cloud Storage, and Cloud SQL.

- Construct and deploy machine learning models using GCP’s Vertex AI (formerly AI Platform) and TensorFlow.

- Implement data security measures and access controls through GCP’s Identity and Access Management (IAM) and Security Command Center.

- Monitor and resolve issues in data pipelines and storage solutions using GCP’s Cloud Monitoring and Cloud Logging (formerly Stackdriver).

- Collaborate with data scientists and analysts to comprehend their data requirements and offer tailored solutions.

- Automate data processing tasks through scripting languages like Python and Bash.

- Engage in code reviews and contribute to the development of best practices for data engineering on GCP.

- Stay current with the latest GCP services and features, evaluating their potential application in the organization’s data infrastructure.

Yes, data engineers code. Their role involves coding to construct the infrastructure that allows organizations to store, process, and analyze extensive datasets. Commonly using programming languages like Python, SQL, Java, or Scala, they develop data pipelines and ETL (Extract, Transform, Load) processes. These processes involve extracting data from diverse sources, transforming it into the desired format, and loading it into a data warehouse or data lake.
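The extract, transform, and load steps described above can be sketched end to end with the Python standard library. This is an illustrative example only: the CSV text stands in for a source system, an in-memory SQLite database stands in for the warehouse, and the `users` table and column names are assumptions.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export standing in for a source system.
SOURCE_CSV = """id,name,signup_date
1, Ada ,2024-01-05
2,Grace,2024-02-10
3,,2024-03-01
"""


def extract(text):
    """Read rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Trim names, cast ids to int, and skip rows without a name."""
    out = []
    for row in rows:
        name = row["name"].strip()
        if name:
            out.append((int(row["id"]), name, row["signup_date"]))
    return out


def load(conn, rows):
    """Create the target table if needed and insert the transformed rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


# SQLite in memory stands in for a data warehouse in this sketch.
conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
loaded = conn.execute("SELECT id, name FROM users ORDER BY id").fetchall()
```

Keeping the three steps as separate functions makes each one testable on its own and lets the source or the target be swapped without touching the transformation logic.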

- Programming Skills: Python, SQL, and Java

- Data Modeling

- Database Management

- ETL (Extract, Transform, Load)

- Big Data Technologies: Hadoop, Spark, and Kafka

- Cloud Computing: AWS, Azure, or Google Cloud

- Collaboration and Communication Skills

- Problem-Solving and Analytical Skills

A GCP Data Engineer resume should ideally be limited to two pages, emphasizing recent experience, relevant skills, and achievements that demonstrate proficiency in GCP and a focus on results. Use concise language and bullet points, and quantify accomplishments where possible. Tailor your resume for each job application, focusing on the skills and experience that match the specific GCP Data Engineer role.

Data Engineer:

- Focus: Data engineers primarily focus on the development and maintenance of the systems and architecture that allow for the effective collection, storage, and retrieval of data.

- Responsibilities: They are responsible for designing, constructing, and maintaining the infrastructure necessary for data generation, transformation, and storage.

- Tasks: Data engineers work on tasks such as building data pipelines, ensuring data quality and reliability, managing databases, and creating ETL (Extract, Transform, Load) processes.

- Skills: They typically have skills in programming languages (such as Python, Java, or Scala), database management, and knowledge of big data technologies.

Data Scientist:

- Focus: Data scientists concentrate on extracting insights and knowledge from data through advanced statistical analysis, machine learning, and predictive modeling.

- Responsibilities: They analyze complex datasets to identify patterns, trends, and correlations that can be used to inform business decisions and strategies.

- Tasks: Data scientists engage in tasks like developing machine learning models, creating data visualizations, and conducting in-depth statistical analyses.

- Skills: They possess expertise in programming (often in languages like Python or R), statistical analysis, machine learning, and domain-specific knowledge.

Collaboration: While data engineers and data scientists have distinct roles, they often work collaboratively. Data engineers create the infrastructure and pipelines that data scientists rely on to perform their analyses and generate insights.

Experienced data engineers at Google earn an average salary ranging from $150,000 to $350,000, complemented by top-tier benefits including health insurance, flexible work schedules, and a hybrid work culture.